FST JOURNAL

AI Strategy

DOI: https://www.doi.org/10.53289/RBCC9405

Embedding AI everywhere and at every level

Wendy Hall

Professor Dame Wendy Hall DBE FRS FREng is Regius Professor of Computer Science, Associate Vice President (International Engagement) and Executive Director of the Web Science Institute at the University of Southampton. She was co-Chair of the UK government’s AI Review, which was published in October 2017, and is the first Skills Champion for AI in the UK. In May 2020, she was appointed Chair of the Ada Lovelace Institute and joined the BT Technology Advisory board in January 2021.

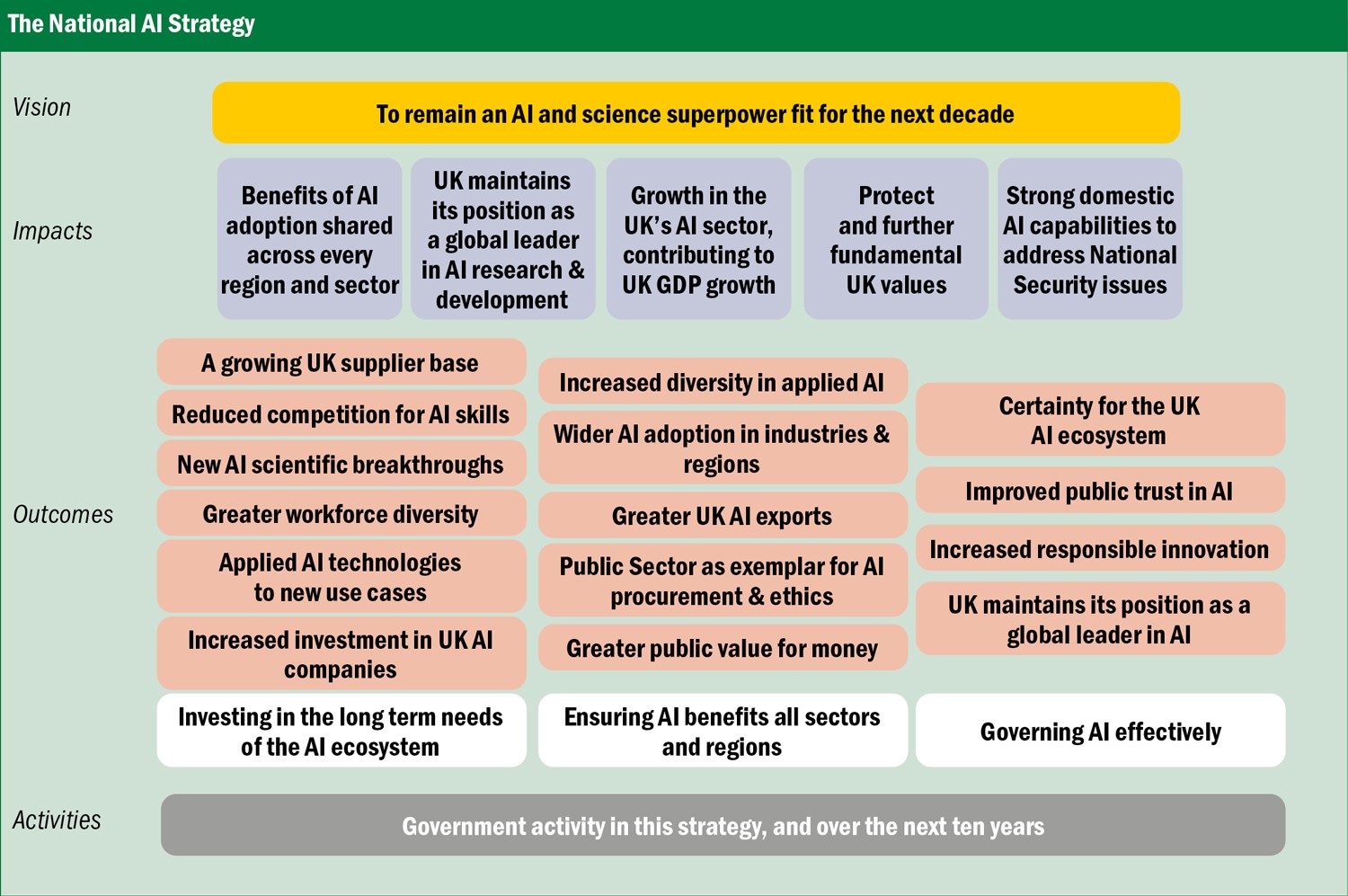

The Hall Pesenti AI Review was published by the UK Government in 2017 and became part of the Industrial Strategy, with its own sector deal and council. In 2020, the AI Council was asked to produce a roadmap for the following five years, and this was published in January 2021. The Office for AI, working with us and others, then produced what was adopted as the National AI Strategy in September 2021.

The UK has been an AI superpower for a long time, although it will never be as big as the USA and China. AI adoption across regions and sectors brings with it an element of levelling up. We aim to maintain the position of the UK, effectively third in the world. For a country of our size that is amazing and it is due to our legacy: we were involved in this area before it was even called AI. All our top universities have very strong AI departments, both broad and specialist. The UK’s startup culture helps generate new companies, bringing growth and wealth into the UK.

The challenge of scale

One challenge is to help those startups become bigger and scale up. This is something that Britain has to tackle over the next few years. Take your foot off the accelerator and you just go backwards. Every country in the world is trying to be good at AI. Here, we need it to contribute to the growth of GDP. It needs to be developed in a way that protects our values, one that is good for society, good for people, good for business and for Government. It is of course vitally important for defence and security, so we need strong AI capabilities in our security agencies.

The desired outcome for the AI sector is to be developing ground-breaking technology, which can be applied in our businesses across all regions and sectors. The public sector needs to use AI to its best advantage. We want value for money for the investment being made, as well as adoption across the country. And then there is the question of trust, of people trusting AI systems – which is not easy.

This all involves long-term investment, not something the UK is terribly good at. The more common practice is to provide five years’ funding and then expect business to cope on its own. Yet AI will remain with us. Other technologies will come along, which will command attention, but AI is going to be with us forever.

As systems get ever more intelligent and can do more and more things, a very close eye must be maintained on what this technology is doing to society and how it works in society. So governance will be very important as this sector develops.

The National Strategy is primarily concerned with Government activity and what needs to be done in the next 10 years. Data Trusts were the top recommendation of the 2017 review and have become a key element in the Strategy. People should be able to trust the data that someone else gives them and share it with confidence. Lots of small companies believe that the playing field is not level in this regard because those who hold large amounts of data will have an overwhelming advantage. Hence our focus on data trusts. There has been some really good pilot work done by the Open Data Institute. With the Ada Lovelace Institute and the AI Council, I chaired a study looking at legal mechanisms for data stewardship. The Royal Society was also involved and its chief executive, being a lawyer by training, took a great interest in the subject. This is becoming a very hot topic. Data is, after all, the foundation of everything we do in AI, whether machine learning or deep learning.

Then there is the challenge of driving adoption across different sectors, such as healthcare, tech-nation, AI startups, big science and purchasing.

In the international arena, the UK is part of the Global Partnership on AI (GPAI), which is a way to reach most of the world except for China. GPAI is led by Canada and France, and the UK is heavily involved in the working groups, which gives us a way to link with many different countries. We have signed an agreement to collaborate with the USA and there are others in the pipeline.

In our initial AI review, the Alan Turing Institute was recognised as the national institute for data science and AI – and it could become a world leader in this area. Working with the Turing Institute, UKRI has funded a number of fellowships to recruit and retain AI researchers in the UK.

At every level

We are trying to introduce AI at every level, not just Higher Education. That means skills for everybody – apprenticeships, schools, etc. The aim is that anyone can get access to AI skills whoever they are, whatever their context, whatever their background. In his Spring Statement, the Chancellor announced funding for another 2,000 AI scholarships for MSc conversion courses, which take people from non-science subjects into AI. These have been incredibly successful in promoting diversity in AI because the scholarships are targeted at under-represented groups such as women, the disabled and ethnic minorities. For me, this is the most important feature of this funding. It is so important that we increase diversity across all aspects of AI. What is not diverse is not ethical.